BRN Discussion Ongoing

- Thread starter TechGirl

- Start date

Here's a very fair question: if you believe that our company will finally, yes finally, show revenues that outpace expenses by the 3rd quarter of 2026, no matter from what source (for example, high speed boats that Donald's boy failed to stop across the Pacific, etc.), then please post a laugh....

Just trying to lighten the mood, many are feeling the pressure of the market at present, that's a very fair assumption.

To be perfectly honest, with no revenue and with capital raisings, it's sort of understandable that we currently sit south of 0.20... hang in there, progress is being made and yes, I'm quietly peeved as well.

Tech

Food4 thought

Regular

as a small bit of insight and 20 / 20 vision I have held stocks then dumped them when they fell south .....

only to see them fricken fly a short time after I sold them

So we invested in this company because we thought the tech was something good for the future ,

for the world as a whole, power saving, cheaper and better, smarter , whatever you thought ,

so hang on to that thought ..

Because if you sell that's it, you are done, and probably at a loss.

just hang in there another 6 months and see just how good it gets.

only up from here

Great !!

www.linkedin.com

#gaisler25yearsinspace | Frontgrade Gaisler

We are proud to announce Sean Hehir, Chief Executive Officer of BrainChip, as one of our speakers at Gaisler's First 25 Years in Space event in February 2026. #Gaisler25YearsInSpace

Fullmoonfever

Top 20

@Diogenese

If you could find time, can you pls run your eye over this patent that just popped up by SNAP Inc, you know, Snapchat etc parent company.

The old "Brainchip Akida is a fast learner" article gets listed in the cited info amongst some other citations.

Any relevance as a nod to inspiration for this patent lodgement, cross over of thinking, design etc or just std protocol?

Cheers

smoothsailing18

Regular

Congratulations again to winners in the Product categories in the 15th annual Best in Biz Awards: Addigy Adobe ADP Aha! Applied Systems Asensus Surgical Atombeam Technologies Aviatrix BrainChip… | Best in Biz Awards

Congratulations again to winners in the Product categories in the 15th annual Best in Biz Awards: Addigy Adobe ADP Aha! Applied Systems Asensus Surgical Atombeam Technologies Aviatrix BrainChip DataDocks Deako Dynamo Software Fidelity Investments First Internet Bank GreenShield iHealth Labs...

Frangipani

Top 20

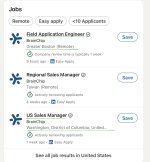

Something I’ve always found very odd is that the job openings listed on the BrainChip website tend to differ from those listed by our company on LinkedIn. Why not post all open positions in both places?

There are currently two job openings listed on the BrainChip website, one of which has been up for ages, namely that for “Sales Director US/Bay Area”, which I first spotted almost a year ago, back in early January. (https://thestockexchange.com.au/threads/brn-discussion-ongoing.1/post-445809).

It has since been reposted several times. However, it appears no suitable candidate has yet been found.

A similar job ad was recently posted on LinkedIn, but this time round it was an opening for a “US Sales Manager” in Washington, DC.

Turns out, though, the description is more or less just a copy & paste from the “Sales Director US/Bay Area” job ad, with two marked differences:

“This position must be located on the East Coast.”

“Expectation to complete at least two contract/deal within your first year of employment.” (Note that for the “Sales Director US/Bay Area” position it was merely one - whoever did the copy & paste and then made the changes evidently forgot to put “contract/deal” into the plural!)

The job description itself refers to the position being that of a “Sales Manager/Director”, adding “This position is a pivotal member of the sales team, reporting directly to the VP of Global Sales”. That phrase was actually taken verbatim from the “Sales Director US/Bay Area” job ad. Weirdly, it even says under “Market Expansion”: “Develop and implement strategic sales plans to achieve sales targets and expand BrainChip's IP adoption in the US/Bay Area market.”

So is it just sloppy proofreading once again or is this new East Coast-based position actually meant to be a replacement for the yet-to-be-filled role of “Sales Director US/Bay Area” (presently still listed as open on the BrainChip website) rather than an additional sales position lower in hierarchy?

Which in turn would raise the question: Why the sudden shift of focus from Silicon Valley to Washington, DC? Is it the geographical closeness to the seat of the US Government and/or to defense or civilian government contractors in the Beltway (i.e. Virginia and Maryland) that is so enticing?

Or has our management team identified a new market opportunity represented by specific (other) industries/companies located (primarily) on the East Coast?

Some readers might readily associate “East Coast” and “company” with Boston Dynamics, but as I’ve mentioned before, Boston Dynamics have their own Robotics and AI Institute (https://rai-inst.com), with plenty of AI experts in their two offices in Cambridge, MA and Zurich, Switzerland. They would know how to reach out for assistance if needed.

BrainChip hiring US Sales Manager in Washington, DC | LinkedIn

Posted 12:00:46 AM. The Sales Manager/Director will spearhead the sales initiatives of BrainChip Inc. in the US…See this and similar jobs on LinkedIn.

BrainChip

US Sales Manager

Washington, DC · Reposted 1 week ago · Over 100 applicants

Promoted by hirer · Actively reviewing applicants

$100K/yr - $175K/yr + Stock, Commission

Remote

Full-time

About the job

The Sales Manager/Director will spearhead the sales initiatives of BrainChip Inc. in the US, focusing on expanding market share, fostering customer relationships, and driving revenue growth. This position is a pivotal member of the sales team, reporting directly to the VP of Business Dev/Sales.

This position must be located on the East Coast.

ESSENTIAL JOB DUTIES AND RESPONSIBILITIES:

- Market Expansion: Develop and implement strategic sales plans to achieve sales targets and expand BrainChip's IP adoption in the US/Bay Area market.

- Customer Relationship Management: Build and maintain strong relationships with key SOC customers, understanding their needs and providing tailored IP solutions.

- Sales Strategy Execution: Drive the execution of sales strategies and initiatives to maximize IP contract revenue and market penetration.

- Lead Generation and Conversion: Identify new business opportunities and manage the entire sales process from lead generation to closing deals.

- Sales Forecasting and Reporting: Provide accurate sales forecasts and reports, analyzing sales data to identify trends and opportunities for improvement.

- Collaboration: Work closely with the marketing, product development, and customer support teams to ensure a cohesive approach to market needs and customer satisfaction.

- Product Knowledge: Maintain a deep understanding of BrainChip's product portfolio and technological advancements to effectively communicate value propositions to customers.

- Competitive Analysis: Monitor and analyze market trends and competitors' activities to adjust strategies accordingly.

- Travel: Domestic travel as required to meet with customers, attend industry events, and participate in training sessions.

- Expectation to complete at least two contract/deal within your first year of employment.

QUALIFICATIONS:

To perform this job successfully, an individual must be able to perform each essential duty effectively and professionally. The requirements listed below are representative of the knowledge, skills, and abilities required. Reasonable accommodations may be made to enable individuals with disabilities to perform the essential functions.

Education/Experience:

- Degree: Bachelor's Degree in Business, Marketing, Engineering, or a related field.

- Experience: Minimum of 7 years of experience in sales within the semiconductor or technology industry, with a proven track record of meeting or exceeding sales targets.

- Technical Knowledge: Familiarity with AI technologies and semiconductor ecosystems is highly desirable.

- Customer Management: Demonstrated ability to manage customer relationships and sales processes effectively.

Other Skills and Abilities:

- Relationship Building: Proven ability to establish and maintain strong relationships with customers and internal teams.

- Sales Acumen: Strong analytical skills to identify market trends, forecast sales, and develop strategic plans.

- Intellectual Property (IP) Sales Process: prior experience managing the sale of IP Licenses, Contracts and Royalty arrangements.

- Communication: Excellent verbal and written communication skills, with the ability to present effectively to diverse audiences.

- Project Management: Experience in managing complex sales projects and coordinating with multiple stakeholders.

- Problem-Solving: Strong problem-solving abilities with a proactive and solution-oriented approach.

- Multitasking: Ability to prioritize and manage multiple tasks efficiently in a fast-paced environment.

Employer-provided

Pay range in Washington, DC

Exact compensation may vary based on skills, experience, and location.

Base salary: $100,000/yr - $175,000/yr

Additional compensation types: Stock, Commission

Also, earlier today, another East Coast-based job got advertised: that of a Field Application Engineer in the Greater Boston Area:

#hiring | BrainChip

We're #hiring a new Field Application Engineer in Greater Boston. Apply today or share this post with your network.

BrainChip hiring Field Application Engineer in Greater Boston | LinkedIn

Posted 12:31:08 AM. *This position is a remote position. This person must be located on the East Coast. The Field…See this and similar jobs on LinkedIn.

BrainChip

Field Application Engineer

Greater Boston · 10 hours ago · 11 applicants

Promoted by hirer · Company review time is typically 1 week

$100K/yr - $160K/yr + Bonus, Stock

About the job

*This position is a remote position. This person must be located on the East Coast.

The Field Applications Engineer will be the primary technical interface between BrainChip Sales and Engineering Members and to support current and future customers. This role will be responsible for post-sales evaluations, system design and integrations, and customer troubleshooting. In addition, the Field Applications Engineer will provide end-user facing documentation in the form of application notes, tutorials, and trainings. You must be able to communicate effectively at the engineering level with customers, understand their objectives and needs to develop and articulate solutions to address customers’ requirements.

*Please note: We are not engaging with external recruiting agencies for this role at this time.

About the Role

ESSENTIAL JOB DUTIES AND RESPONSIBILITIES:

- Work with Sales and Engineering members, to support customers and help develop technical solutions for Brainchip’s Akida Neuromorphic System-on-Chip (NSoC).

- Understand customer’s technical requirements & propose solutions and manage projects related to customer activity resolve any customer challenges.

- Be the customer’s trusted advisor and main interface between Customers and BrainChip.

- Ability to understand and present complex technical requirements, problems, and solutions concisely in verbal and written communications.

- Know and understand ASIC and Systems development process and experience Schematics and CAD drawings.

Qualifications

To perform this job successfully, an individual must be able to perform each essential duty satisfactorily. The requirements listed below are representative of the knowledge, skill, and ability required. Reasonable accommodations may be made to enable individuals with disabilities to perform the essential functions.

Education/Experience:

- Bachelor’s degree or Master’s Degree in Computer Science, Electrical Engineering or equivalent.

- Five to ten years of technical pre-sales and / or customer support experience.

- Strong experience with API programming, scripting languages, Linux, Windows Server, and PC/Server drivers.

- Experience with Video Management System (VMS), Video Analytics, APIs from Milestone, Genentec, OnSSI, or others.

- Understanding of Video Codec Technology (VLC, Intel Quick Sync Video).

- Strong experience in at least one application field CPUs, Tools and methodologies and/or machine learning.

- Broad understanding of Machine Learning, Deep Learning, CNN, and Neuromorphic Computing.

- Strong verbal and written communication skills.

- Strong teamwork and interpersonal skills and analytical skills.

- Desire to be involved in a diverse and creative work environment.

- Must be a self-starter with mindset for growth and real passion for continuous learning.

Preferred Skills

- Software QA/Debug experience.

- Board design and development.

- FPGA experience.

- Machine Learning, Artificial Neural Network Programming.

At BrainChip, we hire based on potential, performance, and alignment with our mission to shape the future of intelligent edge computing. We are committed to providing a fair and respectful workplace where individuals are evaluated on their qualifications and contributions. Employment decisions are made without regard to race, color, religion, sex, national origin, age, disability, or any other status protected by applicable law.

We value the diverse perspectives and experiences that help drive innovation in neuromorphic AI and welcome applicants from all backgrounds who share our passion for advancing technology that matters.

Employer-provided

Pay range in Greater Boston

Exact compensation may vary based on skills, experience, and location.

Base salary: $100,000/yr - $160,000/yr

Additional compensation types: Bonus, Stock

Another recent addition is the job opening for “Director of Software Engineering” (“This is a hybrid role, in our Laguna Hills, CA office 2x-3x a week.”), with references to Akida 3.

“We are seeking a seasoned Principal Software Architect with 7+ years of deep systems software experience to own the full developer platform for our 3rd-generation Neural Processing Unit (NPU).”

BrainChip hiring Director of Software Engineering in Laguna Hills, CA | LinkedIn

Posted 7:27:57 PM. *We are not accepting outside agencies at this time* BrainChip is pioneering neuromorphic edge AI…See this and similar jobs on LinkedIn.

BrainChip

https://www.linkedin.com/jobs/view/4341105585/?alternateChannel=search&eBP=NOT_ELIGIBLE_FOR_CHARGING&refId=2TxkwqNA2JUFjT3ZIPm0Kw==&trackingId=/qMfRMNw7yOuGwhThqqZkw==&trk=d_flagship3_search_srp_jobs

Director of Software Engineering

Laguna Hills, CA · Reposted 16 hours ago · 85 applicants

Promoted by hirer · Actively reviewing applicants

$100K/yr - $225K/yr + Bonus, Stock

Hybrid

Full-time

About the job

*We are not accepting outside agencies at this time*

BrainChip is pioneering neuromorphic edge AI processors that deliver ultra-low-power intelligence at the point of data creation. We are seeking a seasoned Principal Software Architect with 7+ years of deep systems software experience to own the full developer platform for our 3rd-generation Neural Processing Unit (NPU).

Reporting directly to the CTO and partnering closely with our internal compiler lead, you will architect, integrate, and deliver a production-grade software ecosystem—including a VS Code-based IDE with embedded LLM assistance, system-level profiling, target-side bootloader, RTOS integration, and advanced debugging—all built atop mature open-source foundations and our in-house compiler.

Your mission: assemble together a seamless, best-in-class developer experience that hides complexity and accelerates adoption of BrainChip’s unique neuromorphic hardware.

This is a hybrid role, in our Laguna Hills, CA office 2x-3x a week.

Key Responsibilities

1. Developer Platform Architecture & Integration

- Own end-to-end integration of our internal compiler into a unified toolchain with runtime, IDE, debugger, profiler, and deployment pipeline.

2. VS Code IDE with LLM-Powered Developer Assistance

3. System-Level Profiling & Performance Observability

4. Target-Side Bootloader & RTOS Integration

- Architect a secure, minimal target bootloader supporting:

5. Debugging & Hardware-in-the-Loop Workflow

- Extend GDB/LLDB with NPU-specific commands for cycle-accurate stepping, event tracing, and state inspection (membranes, synapses, queues).

- Support JTAG, SWD, and OTA debug with non-intrusive hardware tracing.

6. Hardware-Software Co-Design Feedback Loop

- Work hand-in-hand with the CTO and silicon team to expose hardware capabilities via software abstractions.

- Use profiling and simulation data to influence microarchitecture (e.g., memory tiling, sparsity engines, event routing).

Qualifications

- Education: BS in Computer Engineering, Computer Science, Electrical Engineering, or equivalent.

Experience:

- 7+ years in systems software; 3+ years integrating and productizing complex toolchains (compilers, IDEs, debuggers, profilers).

- Proven success shipping VS Code extensions or full developer platforms used by external teams desired.

- Deep experience with open-source integration and upstream contribution workflows.

- Technical Expertise in many of the following:

– Debug & Profiling: GDB/LLDB extensions, OpenOCD, eBPF, perf, hardware trace (ETM/HTM).

– RTOS & Embedded: Zephyr/FreeRTOS, device trees, linker scripts, bare-metal bring-up.

– Build & Packaging: CMake, Ninja, Yocto, Debian packaging, CI/CD.

– Languages: Expert in C/C++, Rust; strong in Python, TypeScript.

– Compiler Integration: LLVM/MLIR tooling, plugin architecture, pass management

- Domain Fit: Experience with edge AI, neuromorphic, or ultra-low-power SoCs strongly preferred and/or intense curiosity and interest.

Preferred Qualifications:

- Upstream contributions to VS Code, LLVM, Zephyr, OpenOCD, or other large opensource projects.

- Experience with LLM integration in IDEs (Copilot, CodeLlama, custom fine-tuned models).

- Secure boot and firmware update systems in production devices.

- Patents or publications in developer tools, profiling, or edge AI deployment.

- Strong Background in robotics and embedded system

Employer-provided

Pay range in Laguna Hills, CA

Exact compensation may vary based on skills, experience, and location.

Base salary: $100,000/yr - $225,000/yr

Additional compensation types: Bonus, Stock

Worker122

Regular

Have a look!

itso14605, thanks THAT was really worth watching!

Frangipani

Top 20

Update to GitHub last several hours.

Just on a model but this one was interesting I thought given its use of TENNs

Brainchip-Inc/models

An extension of Akida model zoo with pre-trained models

FallVision: Neuromorphic Fall Detection using Akida

Source

Video-based fall detection models on BrainChip's Akida neuromorphic hardware, achieving 98%+ accuracy with ultra-low power consumption.

Environment

tensorflow==2.15.1

numpy==1.26.4

keras==2.15.1

akida==2.14.0

akida-models==1.7

cnn2snn==2.14.0

quantizeml==0.18.1

pandas>=1.3.0

scipy>=1.7.0

matplotlib>=3.5.0

tqdm

rarfile

h5py>=3.6.0

Pillow>=9.0.0

tensorboard>=2.10.0
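For anyone wanting to try the model locally, the pinned versions above could be checked against an existing Python install before running anything. The following is only a rough sketch and not part of the repository: the helper names (`parse_requirement`, `satisfies`, `check_environment`) are made up for illustration, and the version comparison is a simplification that looks at numeric fields only, so pre-release tags are not handled properly.

```python
import re
from importlib import metadata

# Pins copied from the Environment list above.
PINS = """
tensorflow==2.15.1
numpy==1.26.4
keras==2.15.1
akida==2.14.0
akida-models==1.7
cnn2snn==2.14.0
quantizeml==0.18.1
pandas>=1.3.0
scipy>=1.7.0
matplotlib>=3.5.0
tqdm
rarfile
h5py>=3.6.0
Pillow>=9.0.0
tensorboard>=2.10.0
""".split()


def parse_requirement(line):
    """Split 'name==1.2.3' into (name, op, version); op and version may be None."""
    match = re.fullmatch(r"([A-Za-z0-9_.-]+)(==|>=)?(.*)", line.strip())
    name, op, version = match.groups()
    return name, op, (version or None)


def version_key(version):
    """Turn '2.15.1' into (2, 15, 1), using the numeric fields only."""
    return tuple(int(part) for part in re.findall(r"\d+", version))


def satisfies(installed, op, required):
    """Check an installed version string against an '==' or '>=' pin."""
    if op is None:
        return True  # unpinned package: any installed version is accepted
    a, b = version_key(installed), version_key(required)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))  # pad so that 1.7 and 1.7.0 compare equal
    b += (0,) * (width - len(b))
    return a == b if op == "==" else a >= b


def check_environment(pins=PINS):
    """Return {package: status} for every pin in the list."""
    report = {}
    for line in pins:
        name, op, required = parse_requirement(line)
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = "missing"
            continue
        ok = satisfies(installed, op, required)
        report[name] = "ok" if ok else f"found {installed}, want {op}{required}"
    return report


if __name__ == "__main__":
    for name, status in check_environment().items():
        print(f"{name:15s} {status}")
```

Run as a script, it prints one status line per package. A proper tool such as `pip check` or the `packaging` library would be the more robust choice; this is just to show what the pins imply.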

References

- This model uses Brainchip Spatiotemporal TENNs.

Could that update on GitHub possibly be connected to our so far rather secretive partner MulticoreWare, a San Jose-headquartered software development company?

Their name popped up on our website under “Enablement Partners” in early February without any further comment (https://thestockexchange.com.au/threads/brn-discussion-ongoing.1/post-450082).

Three months later, I spotted MulticoreWare’s VP of Sales & Business Development visiting the BrainChip booth at the Andes RISC V Con in San Jose:

“The gentleman standing next to Steve Brightfield is Muhammad Helal, VP Sales & Business Development of MulticoreWare, the only Enablement Partner on our website that to this day has not officially been announced.”

https://thestockexchange.com.au/threads/brn-discussion-ongoing.1/post-459763

But so far crickets from either company…

MulticoreWare still doesn’t even list us as a partner on their website:

Our Partners | MulticoreWare

Our clients and partners span from silicon platform partners to edge sensor makers to Tier-1 to OEMs in various verticals. We're grateful for our partner's assistance.

multicorewareinc.com

Neither does BrainChip get a mention anywhere in the below 11 December article titled “Designing Ultra-Low-Power Vision Pipelines on Neuromorphic Hardware - Building Real-Time Elderly Assistance with Neuromorphic hardware”, although “TENNs” is a give-away to us that the neuromorphic AI architecture referred to is indeed Akida.

What I find confusing, though, is that this neuromorphic AI architecture should consequently be Akida 2.0, given that the author is referring to TENNs, which Akida 1.0 doesn’t support. But then of course we do not yet have Akida 2.0 silicon.

However, at the same time it sounds as if the MulticoreWare researchers used physical neuromorphic hardware, which means it must have been an AKD1000 card:

“In the above demo, we have deployed a complete vision pipeline running seamlessly on a Raspberry Pi with the neuromorphic accelerator attached at the PCIE slot, demonstrating portability and practical deployment validating real-time, low-power AI at the edge.”

By the way, also note the following quote, which helps to explain why the adoption of neuromorphic technology takes so much longer than it would if it were a simple plug-and-play solution:

“Developing models for neuromorphic AI requires more than porting existing architectures […] In short, building for neuromorphic acceleration means starting from the ground up balancing accuracy, efficiency, and strict design rules to unlock the promise of real-time, ultra-low-power AI at the edge”

Designing Ultra-Low-Power Vision Pipelines on Neuromorphic Hardware - MulticoreWare

As AI continues to advance at an unprecedented pace, its growing complexity often demands powerful hardware and high energy resources.

multicorewareinc.com

December 11, 2025

Author

Reshi Krish is a software engineer in the Platforms and Compilers Technical Unit at MulticoreWare, focused on building ultra-efficient AI pipelines for resource-constrained platforms. She specializes in optimizing and deploying AI across diverse hardware environments, leveraging techniques like quantization, pruning, and runtime optimization. Her work spans optimizing linear algebra libraries, embedded systems, and edge AI applications.

Introduction: Driving Innovation Beyond Power Constraints

As AI continues to advance at an unprecedented pace, its growing complexity often demands powerful hardware and high energy resources. However, when deploying AI solutions to the edge we look for ultra-efficient hardware which can run utilizing the least amount of energy possible and this introduces its own engineering challenges. ARM Cortex-M Microcontrollers (MCUs) and similar low-power processors have tight compute and memory limits, making optimizations like quantization, pruning, and lightweight runtimes critical for real-time performance. These challenges on the other hand are inspiring innovative solutions that make intelligence more accessible, efficient, and sustainable.

At MulticoreWare, we’ve been exploring multiple paths to push more intelligence onto these constrained devices. This exploration led us to neuromorphic AI architectures and specialized neuromorphic hardware which provides ultra-low-power inference by mimicking the brain’s event-driven processing. We saw the novelty of this framework and aimed to combine this with our deep MCU experience for opening new ways to deliver always-on AI across medical, smart home, and industrial segments.

Designing for Neuromorphic Hardware

The neuromorphic AI framework we had identified utilized a novel type of neural networks Temporal Event-based Neural Networks (TENNS). TENNs employs a state-space architecture that processes events dynamically rather than at fixed intervals, skipping idle periods to minimize energy and memory usage. This design enables real-time inference on milliwatts of power, making it ideal for edge deployments.

Developing models for neuromorphic AI requires more than porting existing architectures. The framework which we have utilised mandates full int8 quantization and adherence to strict architectural constraints. Only a limited set of layers is supported, and models must follow rigid sequences for compatibility. These restrictions often necessitate significant redesigns, including modification of model architecture, replacing unsupported activations (e.g., LeakyReLU → ReLU) and simplifying branched topologies. Many deep learning features like multi-input/output models are also not supported, requiring developers to implement workarounds or redesign models entirely.

In short, building for neuromorphic acceleration means starting from the ground up balancing accuracy, efficiency, and strict design rules to unlock the promise of real-time, ultra-low-power AI at the edge.
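To make the "full int8 quantization" and "LeakyReLU → ReLU" points above a bit more concrete, here is a minimal, generic Python sketch. It is illustrative only and does not use BrainChip's or MulticoreWare's tooling. The function names and the symmetric per-tensor scheme are assumptions chosen for the example, since the article does not say which quantization scheme the framework uses.

```python
# Illustrative sketch only: generic symmetric int8 quantization and an
# activation swap. None of these names come from the article or from any
# vendor SDK; they are hypothetical helpers.

def quantize_int8(values):
    """Map floats to integer codes in [-127, 127] with one shared scale."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale

def dequantize(codes, scale):
    """Recover approximate floats from the integer codes."""
    return [c * scale for c in codes]

def leaky_relu(x, alpha=0.1):
    """Activation the article lists as unsupported: small slope below zero."""
    return x if x >= 0 else alpha * x

def relu(x):
    """Replacement activation: negative inputs are clamped to zero."""
    return x if x >= 0 else 0.0

weights = [0.5, -1.27, 0.02, 1.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# Rounding error per weight is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

The swap from `leaky_relu` to `relu` changes what the network computes for negative inputs, which is one reason a model usually has to be retrained or fine-tuned rather than simply converted.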

Engineering Real-Time Elderly Assistance on the Edge

To demonstrate the potential of neuromorphic AI, we developed a computer vision based elderly assistance system capable of detecting critical human activities such as sitting, walking, lying down, or falling all in real time running on extremely low power hardware.

The goal was simple yet ambitious:

To deliver a fully on-device, low-power AI pipeline that continuously monitors and interprets human actions while maintaining user privacy and operational efficiency even in resource-limited environments.

However, due to frameworks architectural constraints, certain models such as pose estimation, could not be fully supported. To overcome this, we adopted a hybrid approach combining neuromorphic and conventional compute resources:

- Neuromorphic Hardware: Executes object detection and activity classification using specialized models.

- CPU (Tensorflow Lite): Handles pose estimation and intermediate feature extraction.

This design maintained functionality while ensuring power-efficient on the edge inference. Our modular vision pipeline leverages neuromorphic acceleration for detection and classification, with pose estimation being run on the host device.
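The hybrid split described above can be pictured roughly as follows. This is a hedged sketch, not MulticoreWare's code: the `Stage` class, the backend labels and the three placeholder stage functions are all hypothetical, and the "models" are stubs that only show which stage would run where and in what order.

```python
# Illustrative sketch only: routing pipeline stages to two backends.
# All names below are hypothetical; the stage functions are stand-ins
# for real models and do no actual inference.
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple

@dataclass
class Stage:
    name: str
    backend: str  # "neuromorphic" or "cpu" (a label, not a real device handle)
    run: Callable[[Any], Any]

def run_pipeline(stages: List[Stage], frame: Any) -> Tuple[Any, List[Tuple[str, str]]]:
    """Run the stages in order and record which backend handled each one."""
    trace = []
    data = frame
    for stage in stages:
        data = stage.run(data)
        trace.append((stage.name, stage.backend))
    return data, trace

def detect_person(frame):
    # Stub detector: pretends a person was found in a fixed box.
    return {"frame": frame, "box": (0, 0, 10, 20)}

def estimate_pose(detection):
    # Stub pose estimator: returns head and feet keypoints as (x, y).
    return {**detection, "keypoints": [(5, 2), (5, 18)]}

def classify_activity(pose):
    # Stub classifier: a body that is wider than tall counts as lying down.
    (x0, y0), (x1, y1) = pose["keypoints"]
    label = "lying down" if abs(x1 - x0) > abs(y1 - y0) else "upright"
    return {**pose, "activity": label}

stages = [
    Stage("object detection", "neuromorphic", detect_person),
    Stage("pose estimation", "cpu", estimate_pose),
    Stage("activity classification", "neuromorphic", classify_activity),
]

result, trace = run_pipeline(stages, frame="frame-0")
```

The point of the structure is that only the pose estimation step falls back to the host CPU, while detection and classification stay on the accelerator, matching the split the article describes.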

Results: Intelligent, Low-Power Assistance at the Edge

In the above demo, we have deployed a complete vision pipeline running seamlessly on a Raspberry Pi with the neuromorphic accelerator attached at the PCIE slot, demonstrating portability and practical deployment validating real-time, low-power AI at the edge. This system continuously identifies and classifies user activities in real time, instantly detecting events such as falls or help gestures and triggering immediate alerts. All the processing required was achieved entirely at the edge ensuring privacy and responsiveness in safety-critical scenarios.

The neuromorphic architecture consumes only a fraction of the power required by conventional deep learning pipelines, while maintaining consistent inference speeds and robust performance.

Application Snapshot:

- Ultra-low power consumption

- Portable Raspberry Pi + neuromorphic hardware setup

- End to end application running on the edge hardware

Our Playbook for Making Edge AI Truly Low-Power

MulticoreWare applies deep technical expertise across emerging low-power compute ecosystems, enabling AI to run efficiently on resource-constrained platforms. Our approach combines:

Broader MCU AI Applications: Industrial, Smart Home & Smart City

With healthcare leading the shift toward embedded-first AI, smart homes, industrial systems, and smart cities are rapidly following. Applications like quality inspection, predictive maintenance, robotic assistance, home security, and occupancy sensing increasingly require AI that runs directly on MCU-class, low-power edge processors.

MulticoreWare’s real-time inference framework for Arm Cortex-M devices supports this transition through highly optimised pipelines including quantisation, pruning, CMSIS-NN kernel tuning, and memory-tight execution paths tailored for constrained MCUs. This enables OEMs to deploy workloads such as wake-word spotting, compact vision models, and sensor-level anomaly detection, allowing even the smallest devices to run intelligent features without relying on external compute.

Conclusion: Redefining Intelligence Beyond the Cloud

The convergence of AI and embedded computing marks a defining moment in how intelligence is designed, deployed, and scaled. By enabling lightweight, power-efficient AI directly at the edge, MulticoreWare empowers customers across healthcare, industrial, and smart city domains to achieve faster response times, higher reliability, and reduced energy footprints.

As the boundary between compute and intelligence continues to fade, MulticoreWare’s Edge AI enablement across MCU and embedded platforms ensures that our partners stay ahead, building the foundation for a truly decentralised, real-time intelligence beyond the cloud.

To learn more about MulticoreWare’s edge AI initiatives, write to us at info@multicorewareinc.com.

#gaisler25yearsinspace | Frontgrade Gaisler

We are proud to announce Sean Hehir, Chief Executive Officer of BrainChip, as one of our speakers at Gaisler's First 25 Years in Space event in February 2026. #Gaisler25YearsInSpace

Thanks Frangipani. “Enablement partner” implies an intention to integrate our products with theirs. As you say, they talk about TENNs. Maybe they are waiting for Gen 2 and TENNs, or looking at bringing in TENNs solo?

Could that update on GitHub possibly be connected to our so far rather secretive partner MulticoreWare, a San Jose-headquartered software development company?

Their name popped up on our website under “Enablement Partners” in early February without any further comment (https://thestockexchange.com.au/threads/brn-discussion-ongoing.1/post-450082).

Three months later, I spotted MulticoreWare’s VP of Sales & Business Development visiting the BrainChip booth at the Andes RISC V Con in San Jose:

“The gentleman standing next to Steve Brightfield is Muhammad Helal, VP Sales & Business Development of MulticoreWare, the only Enablement Partner on our website that to this day has not officially been announced.”

https://thestockexchange.com.au/threads/brn-discussion-ongoing.1/post-459763


They are interested in neuromorphic and show that they have definite ideas about how it can be used.

We have also listed Spanidea as an Enablement partner but they have not listed us. Very quiet there as well.

FJ-215

Regular

GrAI Matter@Diogenese

If you could find time, can you pls run your eye over this patent that just popped up by SNAP Inc, you know, Snapchat etc parent company.

The old "Brainchip Akida is a fast learner" article gets listed in the cited info amongst some other citations.

Any relevance as a nod to inspiration for this patent lodgement, cross over of thinking, design etc or just std protocol?

Cheers

View attachment 93738

View attachment 93739
