Yes, everything is possible. However, they've been running their app for many years now, and while they may well add to it to make it better, they currently use their own AI. I always thought it would be a perfect addition to their IP, but they don't make money currently, so they may be reluctant to add more costs. But you gotta spend money to make money!

"I asked Philip Daffas (CEO, PCK) this some time back without a response."

This is no coincidence.

Whenever there are questions that make people feel uncomfortable, they don't respond.

If it's a no, they usually say "no, we aren't using Akida".

I'm not saying they're using Akida, but it's interesting that he didn't respond at all.

BRN Discussion Ongoing

- Thread starter TechGirl

Bravo

Meow Meow 🐾

Nice find. It doesn’t have to mean anything more than Tenstorrent just using RISC-V architecture from SiFive, but I wonder if Jim Keller knows about Akida.

Hopefully! Judging from this extract (below), it looks like Jim Keller is aware of the significance of edge computing and of sensors that do pre-processing, which "naturally interfaces with the rest of this model".

BrainChip and SiFive Partner to Deploy AI/ML Technology at the Edge

BrainChip and SiFive partner to combine their respective technologies to offer chip designers optimized AI/ML compute at the edge. “Employing Akida, BrainChip’s specialized, differentiated AI engine, with high-performance RISC-V processors such as the SiFive Intelligence Series is a natural choice for companies looking to seamlessly integrate an optimized processor to dedicated ML accelerators that are a must for the demanding requirements of edge AI computing,” said Chris Jones, vice president, products at SiFive. “BrainChip is a valuable addition to our ecosystem portfolio.”

Tenstorrent Blackhole, Grendel, And Buda - A Scale Out Architecture For Sparsity, Conditional Execution, And Dynamic Routing

Tenstorrent is one of the leading AI startups with one of the most interesting architectures and software stacks.

This is the Painchek patent:

US10398372B2 Pain assessment method and system

Applicants EPAT PTY LTD [AU]

Inventors HUGHES JEFF [AU]; HOTI KRESHNIK [AU]; ATEE MUSTAFA ABDUL WAHED [AU]

1. A method for determining an effect of a drug on pain experienced by a patient, the method comprising:

using a camera to capture positional information of at least one visible facial feature from the patient;

comparing, using a processor of a pain assessment system, the positional information of the at least one visible facial feature to predetermined data for the at least one visible facial feature to determine reference information that is indicative of a reference level of the pain experienced by the patient;

after administering the drug to the patient using the camera to capture second information indicative of the at least one visible facial feature from the patient;

comparing, using the processor, the second information to the predetermined data for the at least one visible facial feature to determine pain indicating information associated with a level of pain experienced by the patient after administering the drug to the patient;

comparing, using the processor, the pain indicating information with the reference information to determine the effect of the drug on the pain experienced by the patient; and

providing an alternative treatment to the patient for the pain experienced by the patient in response to determining that the effect of the drug is below a predetermined threshold.

...

9. A pain assessment system comprising:

an interface for receiving positional information concerning at least one visible facial feature that is indicative of a reference level of pain, and for receiving second information indicative of the at least one visible facial feature of a patient after administering a drug to the patient, the at least one visible facial feature being capable of indicating a pain level experienced by the patient, wherein the positional information and second information indicative of at least one visible facial feature of the patient are captured using a camera; and

a processor arranged to:

compare the positional information to predetermined data for the at least one visible facial feature to determine reference information that is indicative of the reference level of the pain experienced by the patient,

compare the received second information to the predetermined data for the at least one visible facial feature to determine pain indicating information associated with a level of pain experienced by the patient,

compare the determined pain indicating information with the reference information such that an effect of the drug on the pain experienced by the patient can be determined, and

determine an alternative treatment to the patient based on the determined effect of the drug on the pain experienced by the patient being below a predetermined threshold.
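Stripped of the claim language, claims 1 and 9 describe a reference-versus-follow-up comparison followed by a threshold test. Here is a minimal Python sketch of that logic, assuming facial features arrive as 2-D landmark coordinates and that the "predetermined data" is a neutral-face landmark set. The scoring rule, the weights and the 0.3 threshold are hypothetical and are not taken from the patent or from PainChek's app.

```python
import math
from typing import Dict, Tuple

# Hypothetical representation: feature name -> (x, y) landmark position.
Landmarks = Dict[str, Tuple[float, float]]


def pain_score(observed: Landmarks, neutral: Landmarks,
               weights: Dict[str, float]) -> float:
    """Compare observed landmarks with the predetermined (neutral) data.

    Returns a weighted sum of how far each feature has moved from its
    neutral position; a larger value stands in for more pain.
    """
    score = 0.0
    for name, (nx, ny) in neutral.items():
        ox, oy = observed[name]
        score += weights.get(name, 1.0) * math.hypot(ox - nx, oy - ny)
    return score


def drug_effect(before: Landmarks, after: Landmarks, neutral: Landmarks,
                weights: Dict[str, float]) -> float:
    """Relative drop from the reference (pre-drug) score to the post-drug score."""
    reference = pain_score(before, neutral, weights)
    post = pain_score(after, neutral, weights)
    if reference == 0.0:
        return 0.0  # nothing measurable at baseline, so no measurable effect
    return (reference - post) / reference


def needs_alternative(effect: float, threshold: float = 0.3) -> bool:
    """True when the drug's effect falls below the (hypothetical) threshold."""
    return effect < threshold


if __name__ == "__main__":
    neutral = {"brow": (0.0, 0.0), "mouth": (0.0, 10.0)}
    before = {"brow": (0.0, -3.0), "mouth": (0.0, 14.0)}  # score 3 + 4 = 7
    after = {"brow": (0.0, -2.0), "mouth": (0.0, 13.0)}   # score 2 + 3 = 5
    effect = drug_effect(before, after, neutral, weights={})
    print(round(effect, 3), needs_alternative(effect))  # → 0.286 True
```

The score is just a weighted displacement. The claims don't say how the comparison is scored, so a real system would put its own model in its place.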

As you say, Painchek do not have the cash to invest in hardware, nor do they have a pressing need to do so.

Bravo

Meow Meow 🐾

Here's an article from 2019 which shows the connection between PainCheck's inventors Hoti, Hughes, Mustafa and NVISO.

My parents were going to call me Hoti but they settled on Bravo instead.

Facing Up to Pain - Medical Forum

A WA invention is shaping up to be a game changer in assessing pain in those who can’t speak up for themselves.

equanimous

Norse clairvoyant shapeshifter goddess

For some reason I always picture your cat on the computer doing the BRN research.

Bravo

Meow Meow 🐾

Yes

Admittedly I pay him well below award rates, but I make up for it by showering him with cuddles and yummy treats.

This is pure speculation on my part, but they recently did a cash raise to pay for, amongst other things, a “core technology upgrade”.

At first I was confused by the name of this handle, but I don't think our resident wordsmith here has a Twitter account.

thought the same

Deadpool

Regular

Oscar Wilde quote: "Imitation is the sincerest form of flattery that mediocrity can pay to greatness."

Bravo

Meow Meow 🐾

Xperi DTS

Talk about the minotaur's cave! Nothing for Xperi, nothing for DTS, so I tried CTO Petronel Bigioi and found a few for FOTONATION LTD:

FotoNation is a wholly owned subsidiary of Xperi.

US11046327B2 System for performing eye detection and/or tracking

[0034] As further illustrated in FIG. 3, the system 302 may include a face detector component 316 , a control component 318 , and an eye tracking component 320 . The face detector component 316 may be configured to analyze the first image data 310 in order to determine a location of a face of a user. For example, the face detector component 316 may analyze the first image data 310 using one or more algorithms associated with face detection. The one or more algorithms may include, but are not limited to, neural network algorithm(s), Principal Component Analysis algorithm(s), Independent Component Analysis algorithms(s), Linear Discriminant Analysis algorithm(s), Evolutionary Pursuit algorithm(s), Elastic Bunch Graph Matching algorithm(s), and/or any other type of algorithm(s) that the face detector component 316 may utilize to perform face detection on the first image data 310 .

[0041] The eye tracking component 320 may be configured to analyze the second image data 312 in order to determine eye position and/or a gaze direction of the user. For example, the eye tracking component 320 may analyze the second image data 312 using one or more algorithms associated with eye tracking. The one or more algorithms may include, but are not limited to, neural network algorithm(s) and/or any other types of algorithm(s) associated with eye tracking.

Missed it by that much:

[0050] As described herein, a machine-learned model which may include, but is not limited to a neural network (e.g., You Only Look Once (YOLO) neural network, VGG, DenseNet, PointNet, convolutional neural network (CNN), stacked auto-encoders, deep Boltzmann machine (DBM), deep belief networks (DBN),), regression algorithm (e.g., ordinary least squares regression (OLSR), linear regression, logistic regression, stepwise regression, multivariate adaptive regression splines (MARS), locally estimated scatterplot smoothing (LOESS)), Bayesian algorithms (e.g., naïve Bayes, Gaussian naïve Bayes, multinomial naïve Bayes, average one-dependence estimators (AODE), Bayesian belief network (BNN), Bayesian networks), clustering algorithms (e.g., k-means, k-medians, expectation maximization (EM), hierarchical clustering), association rule learning algorithms (e.g., perceptron, back-propagation, Hopfield network, Radial Basis Function Network (RBFN)), supervised learning, unsupervised learning, semi-supervised learning, etc. Additional or alternative examples of neural network architectures may include neural networks such as ResNet50, ResNet101, VGG, DenseNet, PointNet, and the like. Although discussed in the context of neural networks, any type of machine-learning may be used consistent with this disclosure. For example, machine-learning algorithms may include, but are not limited to, regression algorithms, instance-based algorithms, Bayesian algorithms, association rule learning algorithms, deep learning algorithms, etc.

... no suggestion of a digital SNN SoC, or even an analog one.

However, they did make a CNN SoC:

WO2017129325A1 A CONVOLUTIONAL NEURAL NETWORK

Von Neumann rools!

A convolutional neural network (CNN) for an image processing system comprises an image cache responsive to a request to read a block of NxM pixels extending from a specified location within an input map to provide a block of NxM pixels at an output port. A convolution engine reads blocks of pixels from the output port, combines blocks of pixels with a corresponding set of weights to provide a product, and subjects the product to an activation function to provide an output pixel value. The image cache comprises a plurality of interleaved memories capable of simultaneously providing the NxM pixels at the output port in a single clock cycle. A controller provides a set of weights to the convolution engine before processing an input map, causes the convolution engine to scan across the input map by incrementing a specified location for successive blocks of pixels and generates an output map within the image cache by writing output pixel values to successive locations within the image cache.
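Here is a Python sketch of the data flow in that abstract: read an NxM block, weight it, sum, apply an activation, step to the next location, write the output map. It is a software illustration only. It assumes a "valid" scan with stride 1 and uses ReLU as the activation, because the abstract doesn't name one. It does not model the interleaved image cache that lets the hardware fetch the whole block in one clock cycle, and it returns a new list rather than writing back into a cache.

```python
from typing import Callable, List

Map = List[List[float]]


def relu(x: float) -> float:
    """Example activation function (assumed; the abstract does not name one)."""
    return max(0.0, x)


def convolve_map(input_map: Map, weights: Map,
                 activation: Callable[[float], float] = relu) -> Map:
    """Scan an NxM weight set across the input map, 'valid' positions only.

    At each location the NxM block of pixels is read, multiplied element-wise
    by the weights and summed (cross-correlation, as CNN layers usually
    compute it), then passed through the activation to give one output pixel.
    """
    n, m = len(weights), len(weights[0])
    rows, cols = len(input_map), len(input_map[0])
    output: Map = []
    for r in range(rows - n + 1):        # step the block location down the map
        out_row: List[float] = []
        for c in range(cols - m + 1):    # ...and across each row
            acc = 0.0
            for i in range(n):
                for j in range(m):
                    acc += input_map[r + i][c + j] * weights[i][j]
            out_row.append(activation(acc))
        output.append(out_row)
    return output


if __name__ == "__main__":
    image = [[1, -2, 3], [-4, 5, -6], [7, -8, 9]]
    kernel = [[1, 0], [0, 1]]  # 2x2, so N = M = 2 here
    print(convolve_map(image, kernel))  # → [[6.0, 0.0], [0.0, 14.0]]
```

The two negative sums are clamped to zero by the activation, which is the "subjects the product to an activation function" step in the abstract.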

Mercedes

Prophesee

Tothemoon24

Top 20

Hi TC

I held PCK a couple of years ago and have kept a close eye on their progress since.

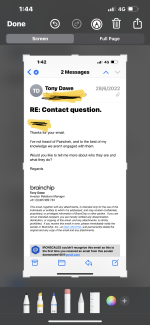

I sent an email to Tony Dawe last year.

This is the reply:

OK, maybe one day. Thanks @Tothemoon24

Bravo

Meow Meow 🐾

Xperi DTS

Mercedes

Prophesee

The other thing is it says DTS can be seen in Garmin's new Unified Cabin Experience. Garmin also have links with Cerence. Look, it also lists embedded gaming: Atari, for gaming without an internet connection!

AUTOMOTIVE

Wednesday, 4 January 2023, 6:00 am CST

Garmin unveils its Unified Cabin Experience at CES 2023

In-vehicle solution with personalized entertainment zones intuitively connects wireless passenger devices to real-time game streams, videos, music and more

January 4, 2023 / PR Newswire: Garmin (NYSE: GRMN) will demonstrate its latest in-cabin solutions for the Automotive OEM (original equipment manufacturer) market at CES 2023 with an emphasis on technologies that unify multiple domains, touchscreens and wireless devices on a single SoC (system on chip). Featuring four infotainment touchscreens, instrument cluster, cabin monitoring system, wireless headphones, wireless gaming controllers, smartphones, and numerous entertainment options, all powered by a single Garmin multi-domain computing module, the demonstration addresses several key technical and user experience challenges for next generation multi-screen systems running on the Android Automotive operating system.

“Garmin’s CES demo highlights technological innovations that advance the capabilities of Android Automotive OS for delivering a multi-zone personalized experience that consumers are expecting,” said Matt Munn, Garmin Automotive OEM managing director. “There are enormous opportunities to integrate many types of features and technologies into a single, unified system, but our connected in-cabin experience goes beyond system integration with innovative new features and an improved user experience.”

The system is customizable for each passenger and easy to operate, utilizing UWB (Ultra-Wideband) positioning technology to automatically connect wireless devices to the appropriate display. A cabin monitoring camera identifies and unlocks each passenger’s personal user interface profile, enabling occupants to enjoy multiple personalized entertainment options including cloud-based blockbuster console/PC games that can be played over 5G connectivity, on-board games, and multiple streaming video platforms—all from some of the most popular names. With the new Cabin App feature, passengers can locate connected devices, control other displays and share video and audio content across multiple passenger zones.

Garmin would like to recognize the following OEM providers for their contributions to the Unified Cabin Experience:

- In-cabin sensing capabilities: Xperi’s DTS AutoSense™ platform uses advanced machine learning and a single camera to enable safety and experience features such as seat occupancy (including body pose), hands-on-wheel, activity and seatbelt detection, driver attention zones, driver distraction or occupant recognition.

- Audio streaming: Xperi’s DTS AutoStage™ hybrid radio solution provides a richer, more immersive radio listening experience. This feature automatically switches between a station’s over-the-air radio signal to its IP stream when traveling in and out of range, as well as providing station metadata, album art and more.

- Navigation: Mapbox enabled Garmin to accelerate the delivery of this demo in a fraction of the time. Mapbox Dash combines the company’s strengths in navigation, search and visualization, including AI and traffic data. This integration provides beautifully rendered and easily customizable interactive maps across all five displays of the system. The Garmin Unified Cabin Experience is also interoperable with other navigation systems.

- Embedded gaming: Atari integration transforms the entire vehicle into an arcade, allowing for family-friendly gaming without a data connection.

- Embedded software solutions: BlackBerry QNX Hypervisor and Neutrino RTOS incorporates best-in-class BlackBerry security technologies that safeguard users against system malfunctions, malware and cybersecurity breaches. These provide the necessary technology to power the industry’s next generation of products, while also supporting 64-bit ARMv8 computing platforms and Intel x86-64 architecture.

Garmin unveils its Unified Cabin Experience at CES 2023 - Garmin Newsroom

TechGirl

Founding Member

Great article about our benchmarking white paper.

www.edgeir.com

Jan 23, 2023 | Abhishek Jadhav

CATEGORIES Edge Applications | Edge Computing News | Industry Standards

BrainChip, a provider of neuromorphic processors for edge AI on-chip processing, has published a white paper that examines the limitations of conventional AI performance benchmarks. The white paper also suggests additional metrics to consider when evaluating AI applications’ overall performance and efficiency in multi-modal edge environments.

The white paper, “Benchmarking AI inference at the edge: Measuring performance and efficiency for real-world deployments”, examines how neuromorphic technology can help reduce latency and power consumption while amplifying throughput. According to research cited by BrainChip, the benchmarking used to measure AI performance in today’s industry tends to focus heavily on TOPS metrics, which do not accurately depict real-world applications.

“While there’s been a good start, current methods of benchmarking for edge AI don’t accurately account for the factors that affect devices in industries such as automotive, smart homes and Industry 4.0,” said Anil Mankar, the chief development officer of BrainChip.

Recommended reading: Edge Impulse, BrainChip partner to accelerate edge AI development

BrainChip proposes that future benchmarking of AI edge performance should include application-based parameters. Additionally, it should emulate sensor inputs to provide a more realistic and complete view of performance and power efficiency.

“We believe that as a community, we should evolve benchmarks to continuously incorporate factors such as on-chip, in-memory computation, and model sizes to complement the latency and power metrics that are measured today,” Mankar added.

Recommended reading: BrainChip, Prophesee to deliver “neuromorphic” event-based vision systems for OEMs

BrainChip believes businesses can leverage this data to optimize AI algorithms with performance and efficiency for various industries, including automotive, smart homes and Industry 4.0.

Evaluating AI performance for automotive applications can be difficult due to the complexity of dynamic situations. One can create more responsive in-cabin systems by incorporating keyword spotting and image detection into benchmarking measures. On the other hand, when evaluating AI in smart home devices, one should prioritize measuring performance and accuracy for keyword spotting, object detection and visual wake words.

“Targeted Industry 4.0 inference benchmarks focused on balancing efficiency and power will enable system designers to architect a new generation of energy-efficient robots that optimally process data-heavy input from multiple sensors,” BrainChip explained.

BrainChip emphasizes the need for more effort to incorporate additional parameters in a comprehensive benchmarking system. The company suggests creating new benchmarks for AI inference performance that measure efficiency by evaluating factors such as latency, power, and in-memory and on-chip computation.
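To make the point about TOPS concrete: the figures a deployment actually cares about (latency, throughput, power and energy per inference) can be derived from a few measurements per run. Here is a minimal Python sketch, assuming you have counted inferences and measured wall-clock time and energy for a run. The metric names are illustrative and not taken from the white paper, and the sample figures are made up purely to exercise the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class Run:
    """Measurements from one benchmark run (all values supplied by the tester)."""
    inferences: int   # number of inferences completed
    seconds: float    # wall-clock duration of the run
    joules: float     # energy consumed over the run


def summarize(run: Run) -> dict:
    """Derive deployment-oriented metrics from a run.

    Assumes inferences ran one at a time (batch size 1), so mean latency is
    simply total time divided by the number of inferences.
    """
    throughput = run.inferences / run.seconds          # inferences per second
    avg_power = run.joules / run.seconds               # watts
    return {
        "latency_ms": 1000.0 * run.seconds / run.inferences,
        "throughput_ips": throughput,
        "avg_power_w": avg_power,
        "energy_per_inference_mj": 1000.0 * run.joules / run.inferences,
        # inferences per second per watt equals inferences per joule
        "ips_per_watt": throughput / avg_power,
    }


if __name__ == "__main__":
    # Made-up numbers, purely to exercise the arithmetic.
    print(summarize(Run(inferences=1000, seconds=2.0, joules=0.5)))
```

None of these numbers can be read off a TOPS rating. Two chips with the same TOPS can differ widely in energy per inference once real sensor input and memory traffic are involved.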

BrainChip says new standards needed for edge AI benchmarking | Edge Industry Review

Limitations of traditional edge AI benchmarking techniques

MLPerf is recognized as the benchmark system for measuring the performance and capabilities of AI workloads and inferences. While other organizations seek to add new standards for AI evaluations, they still use TOPS metrics. Unfortunately, these metrics fail to reflect power consumption and performance in a real-world setting.

Benchmarks in action: Measuring throughput and power consumption

BrainChip promotes a shift towards using application-specific parameters to measure AI inference capabilities. The new standard should use open-loop and closed-loop datasets to measure raw performance in real-world applications, such as throughput and power consumption.

SERA2g

Founding Member

Nice one mate!

In August 2022 I emailed Adam Osseiran about a business I thought could benefit from Akida technology. Based on my brief email, Adam and Tony were interested in meeting with the CEO, whom I knew, albeit not very well.

I had already spoken to the CEO about Brainchip and so made a 'warm' introduction by email and then left it to Tony and Adam to run with.

I did later get confirmation that they had met with the CEO but did not ask whether anything had come of it for obvious reasons. The CEO sent me an email afterwards thanking me for the introduction and said "we had a long call and are following up. Definitely tech we need to know about"

I suppose the point I'm making is that the BrainChip team are more than happy to meet prospective clients at the suggestion of shareholders. So if you have someone in your network you think would be valuable for Tony and the team to meet, have a chat with Tony and the client separately and, provided they are both interested, send an introduction email to both parties.

You never know what may come of it!

Cheers

Colorado23

Regular

G'day legends.

I listened to an interesting podcast with a NZ lad who is a co-founder of Nuro. I seem to remember one or more of you discussing Nuro, and I have reached out to Mr Ferguson to gauge his response in relation to BrainChip. Coincidentally, he also completed a PhD at CMU.

Cyw

Regular

Here's a question to no one in particular.

Who benefits by having the share price tightly controlled at this level or even sub 60c?

Tech x

I don't think it is controlled as such. Don't forget we have at least 30M shares to be dumped into the market. Buyers won't rush in to buy.

db1969oz

Regular

Surely a fair bit of today’s trading was fuelled by this? Relentless selling all afternoon!
